Note that this is mostly just stream-of-consciousness thinking…there’s nothing immediately valuable/learnable here.

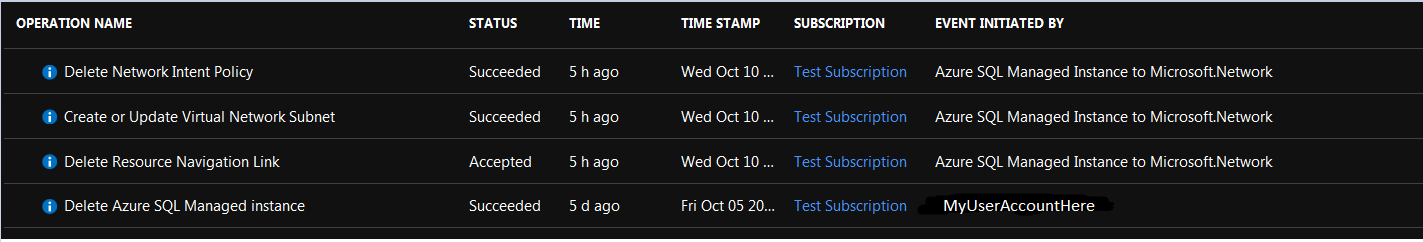

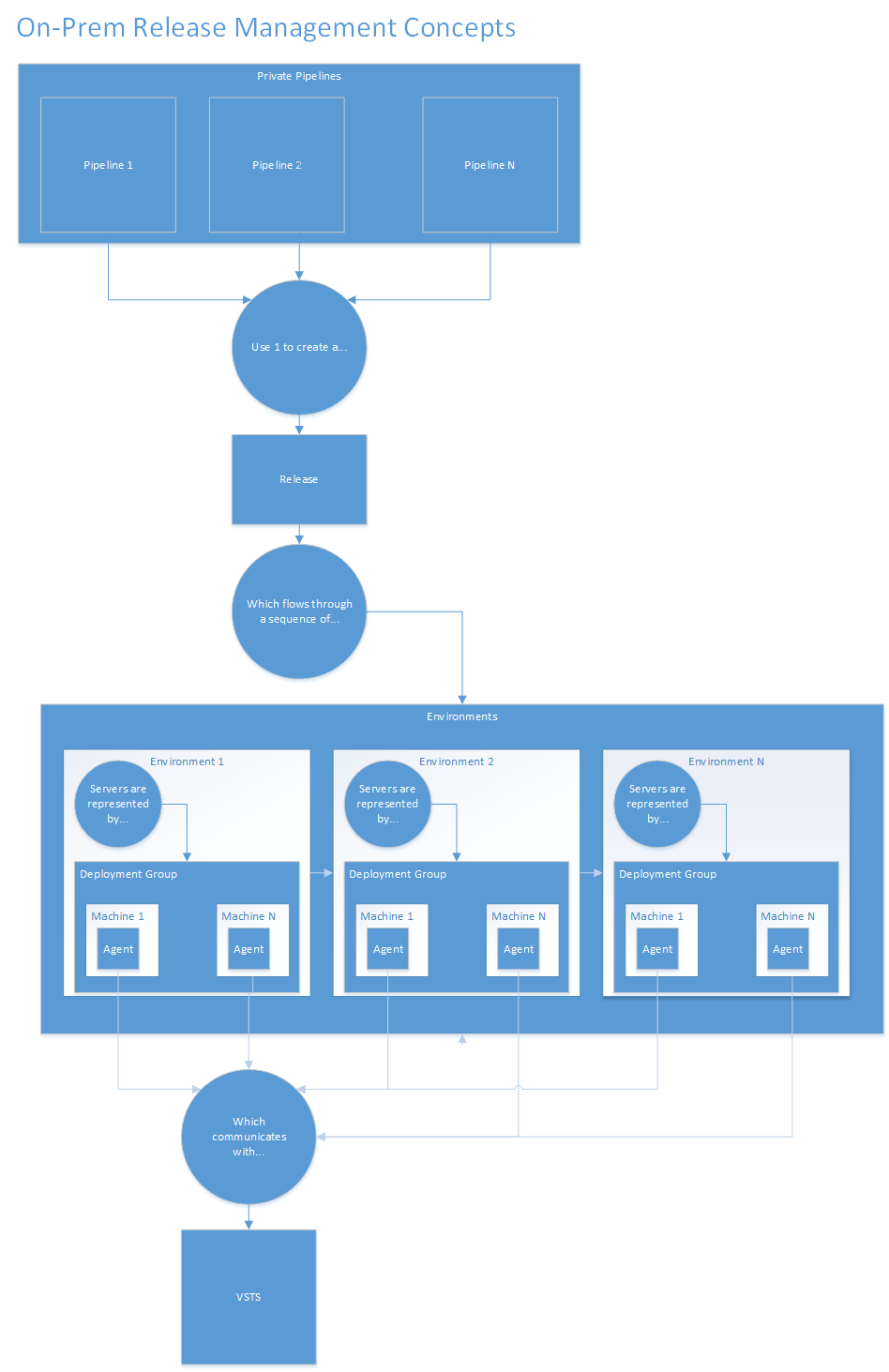

Ops

I’m currently transitioning from a pure software-development position to a DevOps position. From the research/practice I’ve been doing, a big part of DevOps is defining your infrastructure as code. So rather than buying a physical server, putting in a Windows Server USB stick, clicking through the installer, and then manually installing services/applications, you just write down the stuff that you want in a text file. Then a program analyzes that file and “makes it so”.

As a result, you can easily create as many machines as you need with the same configuration. A system typically has several dependencies (such as SQL Server, IIS, etc.). By making those dependencies explicit in a file, a whole new range of capabilities opens up: you no longer have to click, click, wait 5 minutes, and so on in order to construct the system. It’s all automatic.
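To make the "write it down, then make it so" idea a bit more concrete, here is a minimal sketch in Python. It isn't how any real infrastructure tool works internally. The package names and the `plan`/`converge` functions are made up for illustration. The point is only that the desired state is plain data, and a program works out the difference between what you want and what you have.

```python
# Hypothetical desired state: stands in for the "text file" that lists
# what a machine should have installed. The names are illustrative only.
DESIRED = {"sql-server", "iis", "dotnet-runtime"}


def plan(desired: set[str], installed: set[str]) -> dict[str, list[str]]:
    """Compare the desired state with the current one and list the actions needed."""
    return {
        "install": sorted(desired - installed),
        "remove": sorted(installed - desired),
    }


def converge(desired: set[str], installed: set[str]) -> set[str]:
    """Pretend to carry out the plan and return the resulting machine state."""
    actions = plan(desired, installed)
    return (installed | set(actions["install"])) - set(actions["remove"])


if __name__ == "__main__":
    current = {"iis", "telnet"}       # an assumed starting state for one machine
    print(plan(DESIRED, current))     # what would change
    current = converge(DESIRED, current)
    print(plan(DESIRED, current))     # running it again changes nothing
```

Because the description is just data, the same `DESIRED` set can be applied to any number of machines, and applying it to a machine that already matches does nothing.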

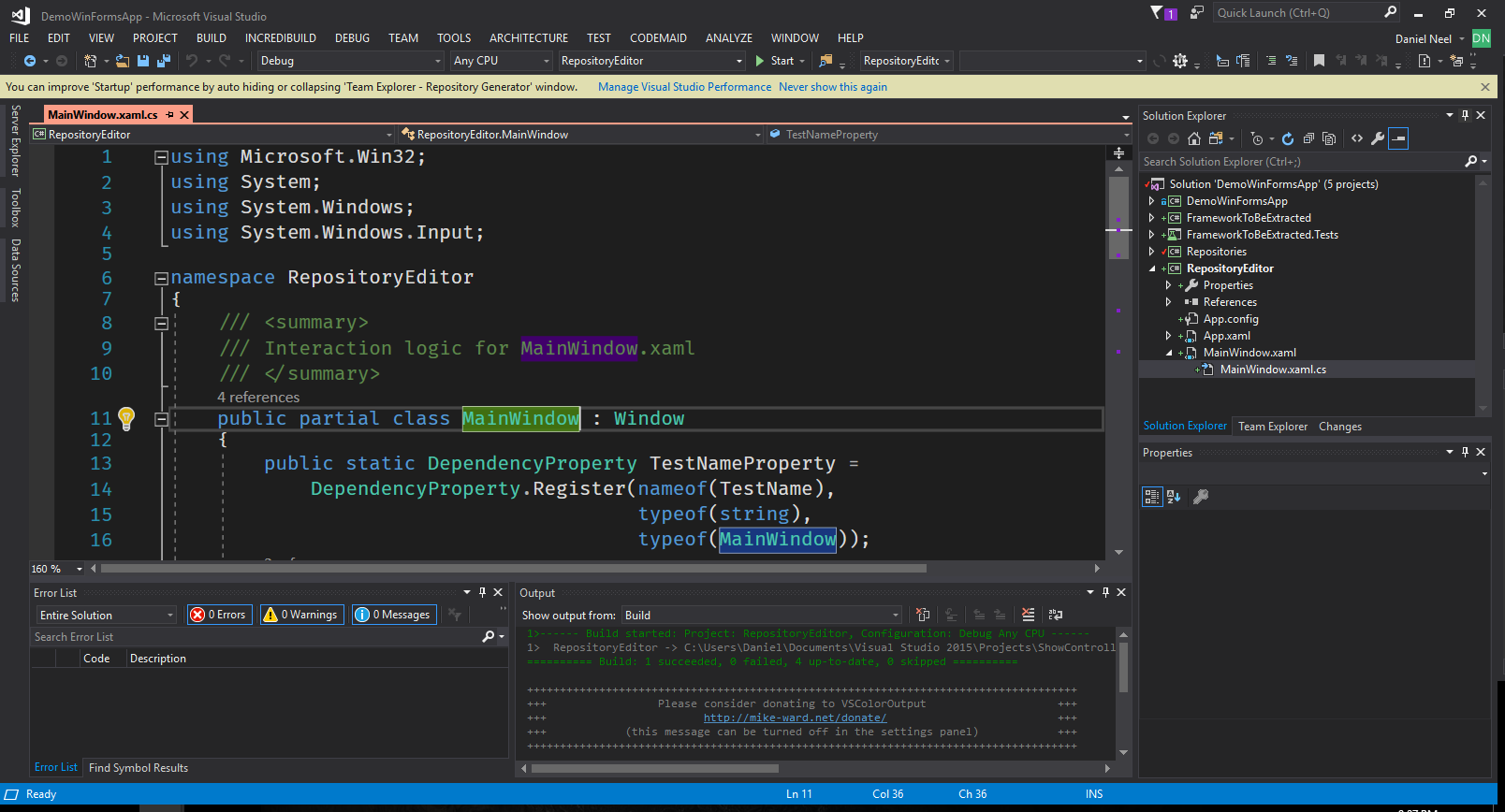

Dev

In software development, dependency injection is a really useful technique. It helps on the path to making a software system automatically testable, and it allows the application to be configured in one place. When you combine dependency injection with code that depends on interfaces, it’s easy to swap different components in and out of your system, for example replacing a real component with a mock object. Ultimately, this means that the system is much easier for a third party to test. Injecting dependencies throughout the application exposes several “test points” that can be used to replace components of the system without having to rewrite it.
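Here is a small sketch of what that can look like, in Python. All of the names (`MessageSender`, `SmtpSender`, `FakeSender`, `SignupService`, `build_app`) are hypothetical and exist only for this example. The service depends on an interface, the real wiring happens in one place, and a test can hand in a fake instead.

```python
from typing import Protocol


class MessageSender(Protocol):
    """The interface the service depends on (hypothetical)."""

    def send(self, to: str, body: str) -> None: ...


class SmtpSender:
    """Stand-in for a real implementation; deliberately not implemented here."""

    def send(self, to: str, body: str) -> None:
        raise NotImplementedError("would talk to a real mail server")


class FakeSender:
    """Test double that records messages instead of sending them."""

    def __init__(self) -> None:
        self.sent: list[tuple[str, str]] = []

    def send(self, to: str, body: str) -> None:
        self.sent.append((to, body))


class SignupService:
    # The dependency is passed in; the service never constructs it itself.
    def __init__(self, sender: MessageSender) -> None:
        self._sender = sender

    def register(self, email: str) -> None:
        self._sender.send(email, "Welcome!")


def build_app() -> SignupService:
    """The one place where real components are wired together."""
    return SignupService(SmtpSender())


if __name__ == "__main__":
    fake = FakeSender()
    SignupService(fake).register("someone@example.com")
    print(fake.sent)
```

The constructor parameter is the "test point": nothing in `SignupService` changes when the sender is swapped.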

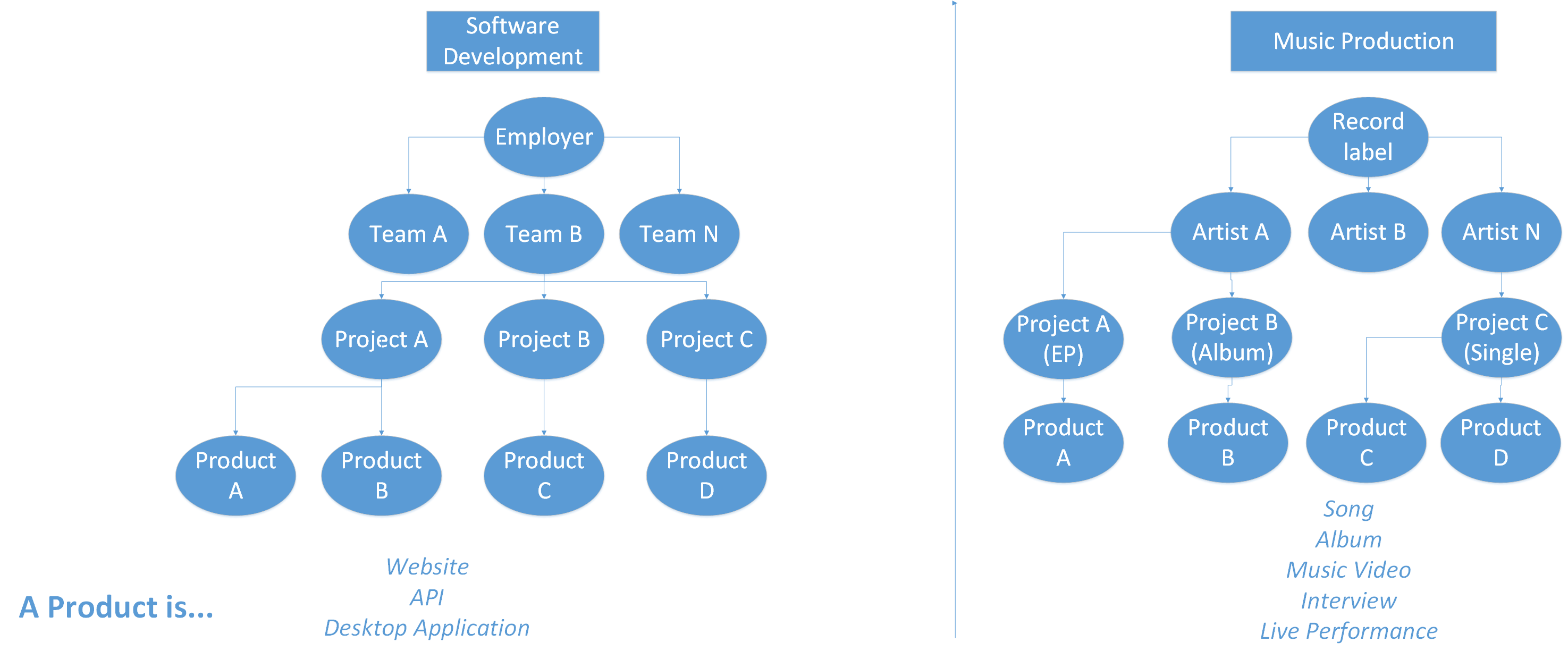

Project management?

I’ve never worked in project management, but projects do have dependencies. “For task X to be complete, task Y has to be completed first.” What would centralized management of a project’s dependencies look like?
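As a rough sketch of one possible answer, the tasks and their prerequisites could live in a single mapping, and a program could work out a valid order from it. The task names below are invented for illustration. The ordering comes from `graphlib.TopologicalSorter` in the Python standard library (Python 3.9+), which takes a mapping from each node to the set of nodes that must come before it.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical project: each task maps to the tasks that must finish first.
TASKS = {
    "design": set(),
    "write code": {"design"},
    "provision server": {"design"},
    "deploy": {"write code", "provision server"},
}


def schedule(tasks: dict[str, set[str]]) -> list[str]:
    """Return one order in which every task comes after its prerequisites."""
    return list(TopologicalSorter(tasks).static_order())


if __name__ == "__main__":
    print(schedule(TASKS))
    try:
        # Two tasks that each wait on the other can never be scheduled.
        schedule({"a": {"b"}, "b": {"a"}})
    except CycleError:
        print("circular dependency detected")
```

With the dependencies in one place, a tool can also catch problems that are easy to miss by hand, such as two tasks that each wait on the other.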

So all this brings to mind a few thoughts/questions…is there any kind of “dependency theory” in the world? Clearly, dependencies are important when producing things. If there existed a general theory of dependencies, could we create tools that help us manage dependencies across all levels of a project, rather than keeping infrastructure dependencies in one place, project dependencies in another, and code dependencies in yet another? The pattern I’m observing so far is that (at least across DevOps and software dev) it’s a Good Thing to centralize your dependencies in a single location. Doing so makes your application/server much easier to configure.

I don’t have any answers…interesting to think on, though. Maybe I’ll write a follow-up later on after some more time stewing on the topic.